The technology giant Meta recently hit a roadblock in its plans to train its large language models (LLMs) on public content from European users. The Irish Data Protection Commission (DPC), acting on behalf of the European data protection authorities (DPAs), has requested that Meta delay these efforts, leading to a significant pause in the company’s AI ambitions across the European Union.

The main point of contention is Meta’s plan to use personal data without explicitly asking for users’ consent. Instead, Meta wanted to rely on the concept of “Legitimate Interests” to process data. This approach allows companies to use data if they believe it is in their legitimate interest and not overly intrusive to privacy. However, many in Europe, including regulators and advocacy groups, feel this doesn’t sufficiently protect users’ privacy rights.

Meta’s Response

Meta expressed its disappointment, emphasizing that the delay is a setback for innovation and AI development in Europe. The company argues that using local data is essential to offer a high-quality experience that captures diverse languages and cultural nuances. Without this data, Meta claims its AI models would provide a “second-rate experience” to European users.

Stefano Fratta, Meta’s global engagement director of privacy policy, said that Meta has always aimed to comply with European laws and regulations and that its methods are more transparent than those of many competitors. However, Meta’s transparency is under scrutiny, especially after the complaints filed by the Austrian non-profit group noyb (none of your business).

Privacy Concerns and Legal Hurdles

Noyb’s complaints highlight a significant concern: Meta’s use of data without clear consent might violate the General Data Protection Regulation (GDPR). The GDPR is a robust privacy law that requires companies to have a valid legal basis, such as user consent, before processing personal data. Noyb’s founder, Max Schrems, argued that Meta’s broad claims about data usage are the opposite of GDPR compliance, and that Meta could proceed with its AI plans if it simply asked for users’ permission.

The Irish DPC’s intervention isn’t just a hurdle for Meta; it’s a broader statement about the importance of privacy and user consent in the age of AI. The DPC and other European data protection authorities are keen on ensuring that any AI development respects users’ privacy rights from the outset.

The pause in Meta’s AI training efforts is also part of a larger picture. It reflects a broader tension between tech innovation and privacy rights, the same tension that surrounds new rules such as the EU AI Act.

The Broader Picture

On one hand, companies like Meta argue that access to vast amounts of data is crucial for developing advanced AI that can benefit users. On the other hand, privacy advocates and regulators insist that such developments must not come at the cost of individuals’ rights to privacy and control over their data.

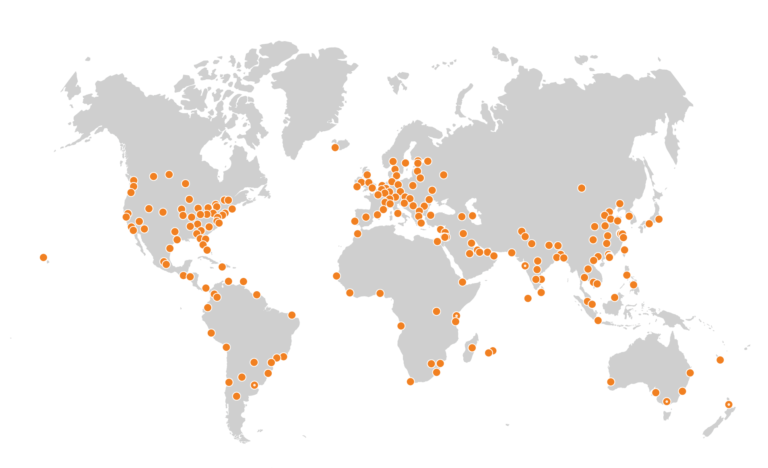

The decision also has significant implications for AI development in Europe. Meta argues that without the ability to use local data, European AI development will lag behind other regions, particularly the U.S., where Meta is already using user-generated content for AI training.

As Meta works with the DPC and other European regulators, the outcome of this situation could set important precedents for how AI is developed and deployed, not just in Europe, but globally. If Meta finds a way to navigate these regulatory challenges, it might pave the way for more balanced approaches that respect user privacy while promoting innovation.

For now, European users will have to wait and see how Meta adapts its AI strategies to align with privacy laws.

— BlackoutAI editors
